The Task

I recently worked on a project where the goal was to make a large dataset searchable through natural language. Instead of writing SQL queries or building complex dashboards, I wanted to simply ask questions like:

“Which group saw the biggest change over time?”

“Show me the trend for users who completed the goal.”

“Break this metric down by segment.”

The system would then:

- Understand the question,

- Identify the relevant filters and columns,

- Execute the corresponding data operations,

- And return a clear, summarised answer, conversationally.
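To make these steps concrete, here is a minimal sketch of how an already-understood question could be turned into a pandas operation. The `QueryIntent` structure and the `run_intent` function are hypothetical names used for illustration, not the project's actual code, and the sketch assumes the language model has already produced the structured intent.

```python
from dataclasses import dataclass, field

import pandas as pd


@dataclass
class QueryIntent:
    """Hypothetical structured output of the language-understanding step."""
    group_by: str                                # column to break the metric down by
    metric: str                                  # column to aggregate
    agg: str = "mean"                            # aggregation name understood by pandas
    filters: dict = field(default_factory=dict)  # column -> required value


def run_intent(df: pd.DataFrame, intent: QueryIntent) -> pd.Series:
    """Apply the equality filters, then group and aggregate the metric."""
    for column, value in intent.filters.items():
        df = df[df[column] == value]
    return df.groupby(intent.group_by)[intent.metric].agg(intent.agg)


# Toy data standing in for the real dataset (values are made up).
sample = pd.DataFrame({
    "group": ["A", "A", "B", "B"],
    "converted": [True, False, True, True],
    "metric_value": [10.0, 20.0, 30.0, 50.0],
})

# "Show me the metric for users who completed the goal, by group."
intent = QueryIntent(group_by="group", metric="metric_value",
                     filters={"converted": True})
result = run_intent(sample, intent)
print(result)
```

Keeping the intent as plain data means the list of columns a question needs is known before any file is read.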

This created a workflow where data analysis felt like talking to a colleague, not operating a database.

And at first — it worked perfectly.

In local development, with:

- A smaller sample of the dataset,

- Fast CPU access,

- No memory constraints,

- And only a few queries per session,

everything ran instantly.

The CSV loaded quickly, pandas responded immediately, and the experience felt smooth.

So I moved forward assuming the CSV structure was “good enough.”

But once the system met real data, the cracks appeared.

As soon as:

- The dataset size increased,

- The number of columns grew,

- More conversations required follow-up queries,

the CSV format became a bottleneck.

The conversational flow slowed down to:

Ask question → wait → wait → wait → timeout.

Each new question triggered:

- Full file read

- Text parsing

- Type conversion

- Memory reload

- Re-computation

Even simple follow-up questions repeated all that work.
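The repeated work is easy to reproduce. The sketch below is an assumption about what a naive CSV-backed loop looks like, not the project's actual code: a hypothetical `answer_from_csv` helper starts from raw text for every question, and the timestamp column arrives untyped each time, so it has to be converted again.

```python
import io

import pandas as pd

# A tiny in-memory CSV standing in for the real file (values are made up).
CSV_TEXT = """user_id,timestamp,group,metric_value
1,2024-01-01,A,10.0
2,2024-01-02,B,30.0
3,2024-01-03,A,20.0
"""


def answer_from_csv(csv_text: str, group_col: str, metric_col: str) -> pd.Series:
    """Hypothetical naive handler: every call starts again from raw text."""
    df = pd.read_csv(io.StringIO(csv_text))            # full read + text parsing
    df["timestamp"] = pd.to_datetime(df["timestamp"])  # type conversion, every call
    return df.groupby(group_col)[metric_col].mean()    # re-computation


# Without explicit conversion, read_csv does not return a datetime column.
raw = pd.read_csv(io.StringIO(CSV_TEXT))
timestamp_is_datetime = pd.api.types.is_datetime64_any_dtype(raw["timestamp"])
print(timestamp_is_datetime)

# Two questions in a row repeat all of the steps above.
first = answer_from_csv(CSV_TEXT, "group", "metric_value")
second = answer_from_csv(CSV_TEXT, "group", "metric_value")
```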

This broke the natural conversational feeling.

The AI was ready to answer — but the data format wasn’t.

I realized the problem was not in the model, not in the logic, and not in the UI —

the data layout itself was holding back the experience.

After seeing the delays pile up, I started evaluating alternatives. I didn’t need a different server, or a different model — I needed a format designed for analytical reads. That’s when I thought about Parquet. It stores data by columns, preserves types, and avoids re-parsing on every query. Exactly what this workflow required.

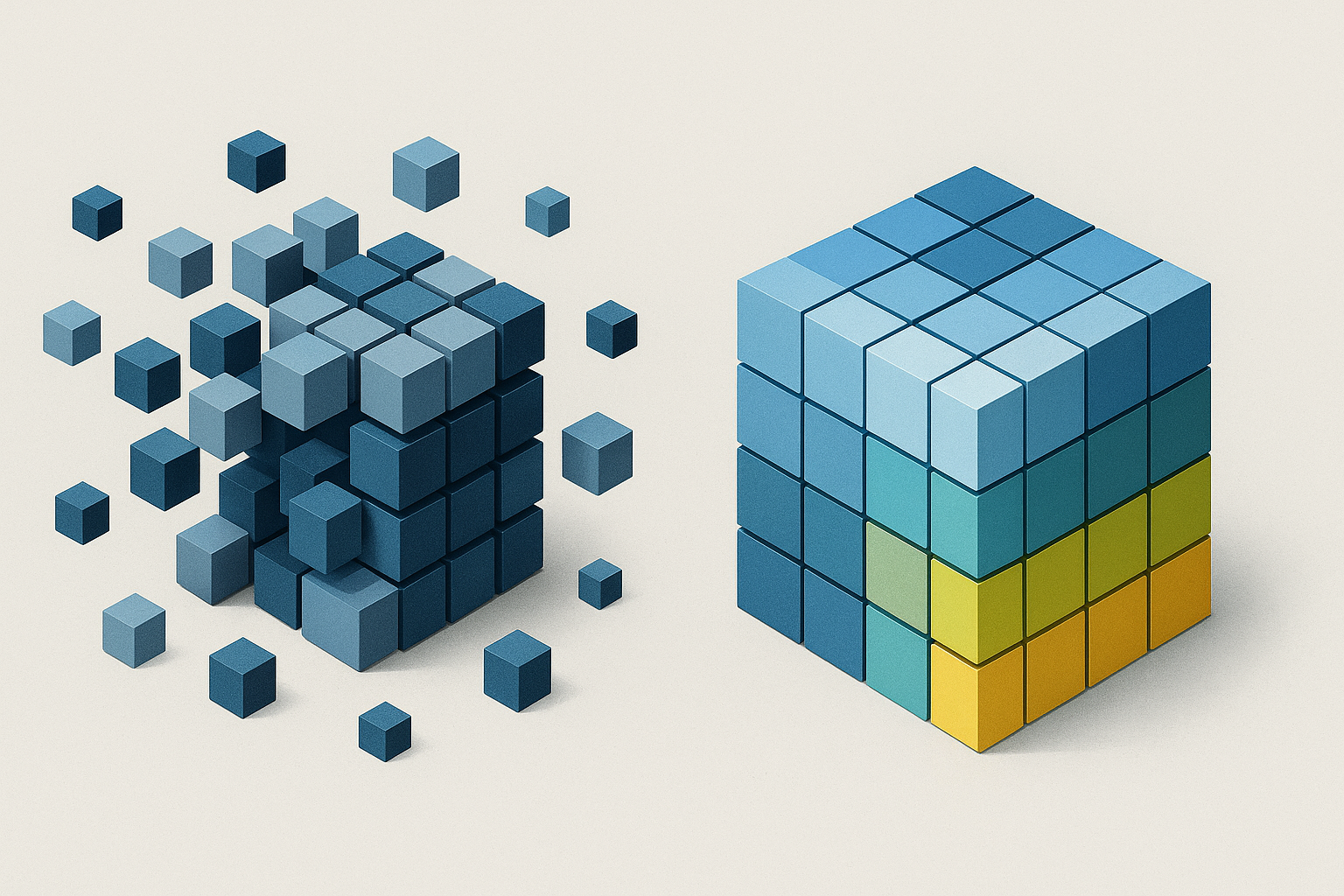

Rethinking How the Data Was Stored

Each conversational request wasn’t asking for full rows.

It was asking about patterns across specific columns:

- “Group this by segment…”

- “Filter this metric…”

- “Calculate the average over time…”

The system was scanning and comparing columns, not reconstructing records.

So the dataset didn’t need to be row-oriented.

It needed to be column-oriented.

That’s when Parquet made sense.

Parquet stores data by column, not by row.

Which means if a query only needs 3 columns, only those 3 columns are loaded — the rest of the dataset remains untouched.

Instead of repeatedly loading and parsing a huge CSV, the system now reads only the data needed for the current question.

This aligns perfectly with how conversational queries behave.

Why Parquet Fits Conversational AI Querying

Conversational data exploration is typically:

- Iterative

- Selective

- Analytical

- Column-focused

A question narrows into a follow-up question — not a full re-scan of the dataset.

CSV forces:

- Full file load

- Repeated text parsing

- High memory use

- Slow response times

Parquet enables:

- Loading only the requested columns

- Binary-native types (dates are dates, numbers are numbers)

- A smaller memory footprint

- Fast, repeated, interactive queries

This is the exact difference between a system that feels conversational

and one that feels like waiting in line.

Converting the Dataset to Parquet

Here is an example workflow used to prepare the dataset:

import pandas as pd

# Load CSV once

df = pd.read_csv("dataset.csv")

# Keep only meaningful columns

columns = [

"user_id",

"timestamp",

"group",

"converted",

"metric_value"

]

df = df[columns]

# Assign correct types (important!)

df["timestamp"] = pd.to_datetime(df["timestamp"])

# astype(bool) maps NaN and any non-empty string to True, so this assumes 0/1 or True/False values

df["converted"] = df["converted"].astype(bool)

df["metric_value"] = pd.to_numeric(df["metric_value"], errors="coerce")

# Save as Parquet (compressed + column-oriented)

df.to_parquet("dataset.parquet", index=False, compression="snappy")

Querying with Parquet in the Conversational Loop

When the AI determines the query intent, it knows which columns are required.

So we load just those:

required_columns = ["group", "metric_value"]

df = pd.read_parquet(

"dataset.parquet",

columns=required_columns

)

result = df.groupby("group")["metric_value"].mean()

This is the difference:

| Aspect | CSV | Parquet |
|---|---|---|
| Data loaded per query | Whole file | Only required columns |
| Types re-parsed on each load | Yes | No |
| Suited for repeated conversational queries | ❌ | ✅ |

What Changed After the Migration

After switching to Parquet, the system changed in three key ways:

1. Response times dropped dramatically

Queries returned in fractions of a second, not seconds.

2. The conversation became fluid

Follow-up questions felt natural:

“Now compare this between segments.”

“Now show the last 6 weeks.”

“Now isolate returning users.”

No long waiting. No reloading. No interruption in thought.

3. The model felt smarter — not because the model changed

but because the dataset finally matched the workflow.

Takeaway

If your system:

- Uses AI to translate natural language into data queries

- Works with a large dataset

- Supports iterative, follow-up questioning

Then CSV will eventually slow you down.

It’s not a code problem.

It’s not a compute problem.

It’s a data layout problem.

Moving to Parquet makes the dataset behave more like a column-optimised analytical engine, without requiring a database or infrastructure change.